Growing Up with Artificial Intelligence

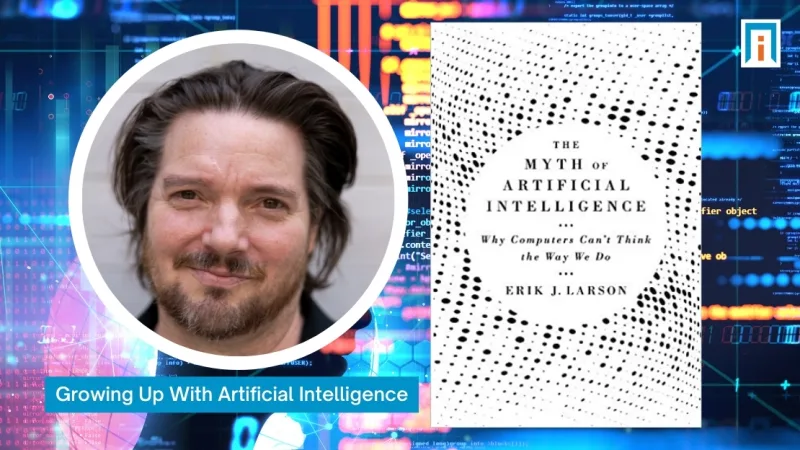

Erik J. Larson is a tech entrepreneur and pioneering research scientist who has been immersed in the world of artificial intelligence, natural language processing, and machine learning for decades. From his vantage point, magical thinking and complacency are stifling true innovation in AI and machine learning.

In his newest book from Harvard University Press, The Myth of Artificial Intelligence, Erik Larson warns that in its current form, artificial intelligence is deeply limited. If our goal is to advance toward artificial general intelligence (a type of computational processing that can make inferences and emulate intuitive human reasoning), Larson reports that we have a long way to go. In fact, says Larson, predictions about a bold future that are not necessarily borne out by reality are clouding the AI field. The author worries that an overhyped sense of achievement and unrealistic expectations of emergent technology will stifle scientific innovation, preventing us from realizing AI’s true potential.

Below, Larson outlines his concerns about the future of AI while sharing highlights from his background in the field. Because machine learning plays an important part in what we do at Influence Networks, we connected with Larson to discuss his book in further detail. Check out the pair of interviews below, or read on as Larson discusses the inspiration behind his new book!

Interview: What the Future Holds for Artificial Intelligence

Interview: The Inspiration for Academic Influence

The Winter of Artificial Intelligence

I started working in the field of artificial intelligence over two decades ago. At that time, in 2000, the web wasn’t mainstream yet, and talk in my first company focused on trying to overcome the brittleness of what we called shallow machine learning and statistical approaches in the field.

Back in the 2000s, AI was just emerging from one of its notorious winters. With much help from the media, leaders in AI had over-promised and under-delivered. I first used the tech in 2000 — but already there was a monster in the closet, ominously grumbling and pushing its way through the door: Google.

Google founders Larry Page and Sergey Brin were two Stanford University graduate students who were largely unknown except to venture capitalists and a small but fast-growing user base. Page and Brin didn’t call the Google technology AI (or at least, it wasn’t a major part of their pitch). However, they did make the point that it worked. Google went on to become the linchpin of what would become modern artificial intelligence, and modern AI was all about big data, which the exploding World Wide Web would soon provide.

My company tried (and failed) to build a search engine using the old techniques, called knowledge representation and reasoning.

But by the time I left the company in 2001, it had already become obvious that online search was the domain of data and machine learning algorithms, not knowledge and reasoning.

Upon leaving that company, I joined the crowd — or rode the wave. I started working on machine learning.

Prophesying Super-Smart AI

“Futurists are always, almost admirably, immune to realities on the ground.” – @ErikJLarson

One of the constants in AI has been the presence of always-vocal futurists foretelling the coming of smart machines. Die-hard futurists like Ray Kurzweil (now Director of Engineering at Google) remained sanguinely optimistic about the arrival of true AI even in the coldest winters. Kurzweil published his widely read book, The Age of Spiritual Machines (the title says it all), in 1998. In that pre-web era, AI was decidedly stagnant. Serious researchers didn’t even use the term AI much; it was safer and sounded more scientifically respectable to discuss specific approaches and algorithms, like hidden Markov models or maximum entropy.

But futurists are always, almost admirably, immune to realities on the ground.

Even doomsayers supported futurist claims by agreeing that problems with super-smart AI were just around the corner. Most notoriously, Bill Joy, former CTO of now-defunct Sun Microsystems (which created the hugely popular Java programming language), penned a warning to the world in Wired magazine at the turn of the century. Joy’s article entirely ignored AI’s past failures and other 1990s disappointments in the field. (See Why the Future Doesn’t Need Us.)

This constant drumbeat from futurists of every stripe bothered me. But as the web continued to grow, the once shallow AI technologies like machine learning began showing promise. I adjusted my skeptical views about futuristic AI (or put them aside), and focused on creating new technologies and new companies delivering value with them.

Web 2.0

This was an exciting time for me. By 2005, a whole host of time-worn but now seemingly new ideas flourished in web culture. Web 2.0 was born, giving us blogs, status updates, and other user-generated content (which, in turn, all became big tech a few years later). Communal, open-source projects like Wikipedia encouraged the view that crowds were smarter or wiser (a view informing the title of a best-selling book by James Surowiecki in 2005, The Wisdom of Crowds). Other pundits and culture critics announced the coming of a supercharged creative era online where, instead of watching TV, web denizens would unite on collaborative projects to remake the world with creative expression and culture.

AI Spring

That happened, in a way, but the downward turn came a few years later. It was AI, actually, but this time in a bright spring. In short, AI talk was back by the end of the decade. Not only was it back; it was on steroids. It seemed like everyone was now a futurist. I remember this turn troubling me, even though it was an exciting time to be an AI scientist. All the Web 2.0 ideas about human innovation, collaboration, and flourishing culture began disappearing in the wake of enthusiasm over machines.

AI is necessary for the web (and so, really, for our modern lives). Spam filters use a kind of AI (machine learning), and they perform a valuable service. Content personalization is made possible by big data and AI. And AI streamlines manufacturing and optimizes supply chains for large retailers like Walmart. There’s nothing wrong with this kind of AI; it powers the modern world.

Frustratingly, though, by 2010 we could already see that AI wasn’t getting smarter in any meaningful way. It was helping us manage a data deluge that we had generated ourselves.

The futurists, of course, seized on the largely commercial success of data-driven AI like machine learning to proclaim (yet again) the imminent arrival of smart machines. In 2012, when the convolutional neural network AlexNet blew away the competition at the ImageNet Large Scale Visual Recognition Challenge (a well-known image classification contest), we had a bona fide useful innovation in AI. We could claim that we had seen the last AI winter, given how widely businesses had begun to adopt these systems.

But What about Thinking Machines?

But, to me, nothing much had changed since 2000 on the core question of whether machines could think. Google set in motion an idea that proved immensely useful and powerful for practical AI: that data and human trust signals like HTML links to web pages provide an ideal dataset for commercial AI development. The rest of the tech world in Silicon Valley and everywhere else (I was in Austin, Texas, another hotspot) followed suit, ushering in a new era of practical AI. Older methods were retired (though traditional knowledge-based methods are reappearing today in hybrid systems development).

But nothing else really changed. The futurists were still, by my lights, talking a kind of technobabble. And nothing coming out of Google, Facebook, other big tech companies, research labs, or even the government really underwrote the hype.

Artificial Intelligence’s Core Myth

So, I decided to write my book, The Myth of Artificial Intelligence. I figured that readers should know about the difference between commercial, practical AI and the sort of general intelligence that futurists insist will soon emerge, with steady progress in practical and commercial applications leading all the way up to true AI, or artificial general intelligence. The key point is devastating: there isn’t a path from one to the other. Narrow (usable) AI isn’t a foundation for general artificial intelligence. General AI may still be possible, of course, but we can’t get there riding a wave of machine learning or, for that matter, any of the other traditional, now-retired methods.

The Inevitability of Inferring Machines?

There’s actually a question mark at the center of AI; the futurists’ claims are based on a kind of wishful thinking that this isn’t so. Futurism and hype about AI, in other words, misrepresent the facts in a way that demands a response. In the interest of providing such a response, my book focuses on the core question behind claims about machine intelligence. I ignore more philosophical ideas about machines coming alive or having conscious experience, and ask hard questions about how computational systems are made to think.

This is a question about what’s called inference, a multifaceted word in the English language. What is an inference? For the purposes of addressing the AI question, an inference is a conclusion based on what you know and what you see around you. The inference question bears directly on whether, say, a self-driving car can ever understand what it sees on the road ahead (and act accordingly). It bears on the question of whether Alexa can understand the words you speak. This question probes whether a Roomba® could ever become Rosie the Robot from The Jetsons.

All the devil’s details about future AI are in these questions about inference and a machine’s ability to reason and draw conclusions based on evidence. It’s a powerful framework within which to analyze the field. What this question reveals about AI, both past and present, is nothing short of a major mystery about getting to artificial general intelligence — not a steady path towards success, as futurists always claim.

If you look closely enough at inference, you realize that we don’t yet have a clue.

AI Hype Factories

“It is a myth that genuine machine smarts are on their way and even imminent.” – @ErikJLarson

It is a myth that genuine machine smarts are on their way and even imminent. And this inevitability myth about AI, promoted by futurists and media and accepted by too many in the public, is part of a larger problem I see with buying into scientific hype about the future.

In the second part of The Myth, I address the very real dangers stemming from AI hype. We don’t know what the future will bring, of course, and pretending otherwise (like pretending about serious things generally) is a bad idea.

AI needs innovation, just as science and culture do. And by taking the futurist perspective on AI seriously, we risk downplaying the continued need for innovation, while endlessly talking up the intelligence and potential of machines. So in The Myth, I unpack this falsehood and explore our increasingly mechanistic worldview, of which talk about AI is only the tip of the spear. We will need our own human smarts if we are not only to forge paths into the future but also to make this future a place we want to call our own.

I hope you enjoy the book.